
You may have noticed our new metagame analysis article series, which dives deep into Marvel Rivals data to help you navigate the current meta. We update it each week, so you can follow the meta as it shifts.

DotGG Premium members can see the latest week's report, which will be released to all players the following week. However, you can still read the latest analysis article to see a rundown of the stats.

Below, we explain how the data is collected and used.

How are heroes scored and weighted?

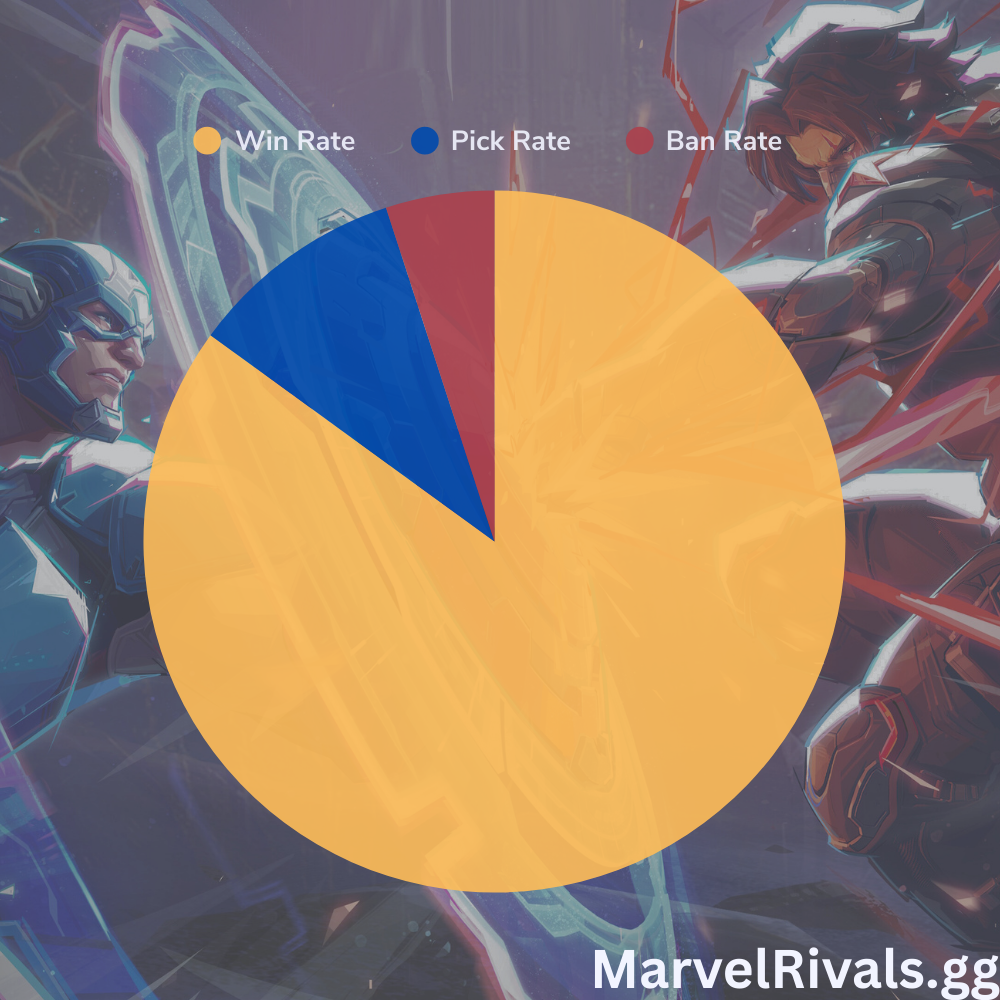

My analysis uses a simple weighted scoring system to evaluate hero performance. Data is pulled from multiple third-party tools and snapshotted in one place. Win rate, pick rate, and ban rate are locked in for each hero at the start of the week and again seven days later. I then examine the shifts in performance and run everything through the weighted scoring system.

Hero Rankings Weighted Scoring = (8.5x win rate) + (1x pick rate) - (0.5x ban rate)

The idea is simple: win rate should be the most significant factor in ranking heroes. Pick rate is factored in as well, since how often players pick a hero can indicate its power, or at least player preference.
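To make the formula concrete, here is a minimal Python sketch of it. This is an illustration, not the tooling behind these rankings: the function name `hero_score` is hypothetical, rates are assumed to be percentages (52.0 means 52%), and the sample hero is made up.

```python
# Coefficients taken from the Hero Rankings formula above.
WIN_WEIGHT = 8.5
PICK_WEIGHT = 1.0
BAN_WEIGHT = 0.5


def hero_score(win_rate: float, pick_rate: float, ban_rate: float) -> float:
    """Weighted ranking score for one hero (hypothetical helper).

    Assumption: all three rates are percentages, e.g. 52.0 for 52%.
    Ban rate is subtracted, so a higher ban rate lowers the score.
    """
    return WIN_WEIGHT * win_rate + PICK_WEIGHT * pick_rate - BAN_WEIGHT * ban_rate


# Hypothetical hero: 52% win rate, 10% pick rate, 20% ban rate.
print(hero_score(52.0, 10.0, 20.0))  # 442.0
```

Because win rate carries a coefficient of 8.5, a one-point change in win rate moves the score as much as an 8.5-point change in pick rate.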

Why is ban rate penalized in the weighted scoring model? Aren’t heroes that are being banned more frequently more likely to be OP?

Ban rate can be a decent proxy for power level, or at least for how annoying a hero is to play against. Unfortunately, it also works against you when choosing which characters to play and specialize in for competitive. There is an opportunity cost to picking, practicing, and playing a character that is likely to get banned. In general, the more you play a hero, the more you can expect to improve, and the more those improvements will show up in your overall win rate with that hero. If you can't play your main in over a third of your competitive games, you may be limiting both your performance and the practice time you need to master that hero.

Another thing to note is that ban rate effectively reduces a hero's pick rate. A banned hero would not always have been picked, but the higher the ban rate, the more likely it is that a player who wants to play that character cannot. Therefore, I've factored a ban rate penalty into my weighted scoring model: the lower the ban rate, the lower the penalty. The ban rate coefficient currently accounts for 5% of the formula's total weight (0.5 out of a combined 10).
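The 5% figure comes from comparing each coefficient in the Hero Rankings formula to the sum of all three (8.5 + 1 + 0.5 = 10). The short sketch below, which only restates those coefficients, computes each factor's share of the total weight. Note that this is a share of the weights; the actual number of points a hero loses to the ban penalty still scales with its ban rate.

```python
# Coefficients of the Hero Rankings formula (the sign of the ban term is
# ignored here, since we only want each factor's relative weight).
weights = {"win rate": 8.5, "pick rate": 1.0, "ban rate": 0.5}

total = sum(weights.values())  # 10.0
shares = {name: w / total for name, w in weights.items()}

for name, share in shares.items():
    print(f"{name}: {share:.0%}")
# win rate: 85%
# pick rate: 10%
# ban rate: 5%
```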

Movers & Shakers

No adjustments were made to the Movers & Shakers weighted scoring formula this week.

This weighted scoring system looks at the change, or delta (Δ), between the current week's data and the previous week's snapshot. This shows how heroes are trending and lets us identify the biggest winners and losers of the week. In this category, heroes who are winning more, being picked more, and being banned less receive more of a lift, while heroes who are performing worse week over week rank lower.

The weights for this category must differ from those used for the base rankings. Without a balance patch, hero win rates stay relatively stable week to week, but pick rates vary drastically as the meta evolves. Reusing the base ranking weights here would make scores driven almost entirely by changes in pick rate, which paints a skewed and misleading picture of actual performance.

Movers & Shakers Weighted Scoring = (20x Δwin rate) + (2x Δpick rate) - (3x Δban rate)
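Here is a minimal sketch of how the Movers & Shakers formula could be applied to two weekly snapshots of the same hero. The `Snapshot` class and `movers_score` function are hypothetical names, rates are assumed to be percentages, and the sample numbers are invented for illustration.

```python
from dataclasses import dataclass


@dataclass
class Snapshot:
    """One hero's rates at a weekly snapshot (assumed to be percentages)."""
    win_rate: float
    pick_rate: float
    ban_rate: float


def movers_score(previous: Snapshot, current: Snapshot) -> float:
    """Movers & Shakers score: 20x Δwin rate + 2x Δpick rate - 3x Δban rate."""
    d_win = current.win_rate - previous.win_rate
    d_pick = current.pick_rate - previous.pick_rate
    d_ban = current.ban_rate - previous.ban_rate
    return 20 * d_win + 2 * d_pick - 3 * d_ban


# Hypothetical hero: win rate up 1 point, pick rate up 2, ban rate down 1.
last_week = Snapshot(win_rate=49.0, pick_rate=8.0, ban_rate=12.0)
this_week = Snapshot(win_rate=50.0, pick_rate=10.0, ban_rate=11.0)
print(movers_score(last_week, this_week))  # 20 + 4 + 3 = 27.0
```

Because the ban term is subtracted, a falling ban rate (a negative Δ) adds to the score, which matches the idea that heroes being banned less get more of a lift.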

Once the Movers & Shakers values are calculated, I run a z-score analysis to determine how many standard deviations (σ) each data point lies from the mean of the set. This flags statistical outliers and puts everything on a comparable scale for analysis. It also helps with machine learning and with smoothing the data and keeping it reliable over time.
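A z-score is z = (x − μ) / σ, where μ is the mean of the set and σ its standard deviation. The sketch below standardizes a set of Movers & Shakers scores that way. It assumes the population standard deviation (`statistics.pstdev`), since the variant used isn't specified, and the hero names and scores are placeholders.

```python
from statistics import mean, pstdev


def z_scores(scores: dict[str, float]) -> dict[str, float]:
    """Return z = (x - mean) / sigma for each hero's score.

    Assumption: population standard deviation (pstdev). If every score
    is identical, sigma is 0 and all z-scores are reported as 0.0.
    """
    values = list(scores.values())
    mu = mean(values)
    sigma = pstdev(values)
    if sigma == 0:
        return {hero: 0.0 for hero in scores}
    return {hero: (x - mu) / sigma for hero, x in scores.items()}


# Hypothetical Movers & Shakers scores for three placeholder heroes.
weekly = {"Hero A": 27.0, "Hero B": 3.0, "Hero C": -6.0}
z = z_scores(weekly)
for hero, value in z.items():
    print(f"{hero}: {value:+.2f}σ")
```

A positive z-score means a hero moved up more than the average hero that week; a negative one means it moved up less (or fell).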

The limitations of weighted scoring systems

Given the amount of data to gather, compile, calculate, and analyze, segmenting everything into separate Elo brackets for ranked play would require substantial effort. To work around this limitation, I'll call out heroes that are performing well in specific competitive ranks. For example, Hela is arguably the best DPS character in the game in Celestial+ lobbies, but she is much less frightening in the hands of a Silver player. Fewer than 8% of the player base competes in Celestial+ lobbies anyway, so an overall view of the competitive landscape across all ranks is, by default, normalized across the entire player base.

Improving the model over time

With community and pro feedback, I'll improve the weighted scoring system over time. This is likely not its optimal form, but it provides a good baseline for comparing data over time and tracking early shifts in the Marvel Rivals Season Two meta. Any further changes to the weighted scoring system will be called out in future articles in the series.

Note on Team-Ups: Because of how some third-party data capture tools work, some Team-Ups involving more than two characters are split into individual pairs, while others are captured as the full group. This creates some data accuracy issues, which I'll work to smooth out in future articles in the series through my own tooling and possible improvements to third-party tools.
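To illustrate why the two capture styles don't line up, the sketch below breaks a three-hero Team-Up into every two-hero pair. One tool might record a match as a single entry for the full group, while another records the same match as three pair entries. The helper name and hero names are hypothetical placeholders, not how any specific tool is implemented.

```python
from itertools import combinations


def split_into_pairs(team_up: tuple[str, ...]) -> list[tuple[str, str]]:
    """Break a Team-Up into every unordered two-hero pair (hypothetical helper)."""
    return list(combinations(team_up, 2))


# Hypothetical three-hero Team-Up with placeholder names.
pairs = split_into_pairs(("Hero A", "Hero B", "Hero C"))
print(pairs)
# [('Hero A', 'Hero B'), ('Hero A', 'Hero C'), ('Hero B', 'Hero C')]
```

A three-hero group yields three pairs, so pair-based counts for a single match can be three times larger than group-based counts unless the two styles are reconciled.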